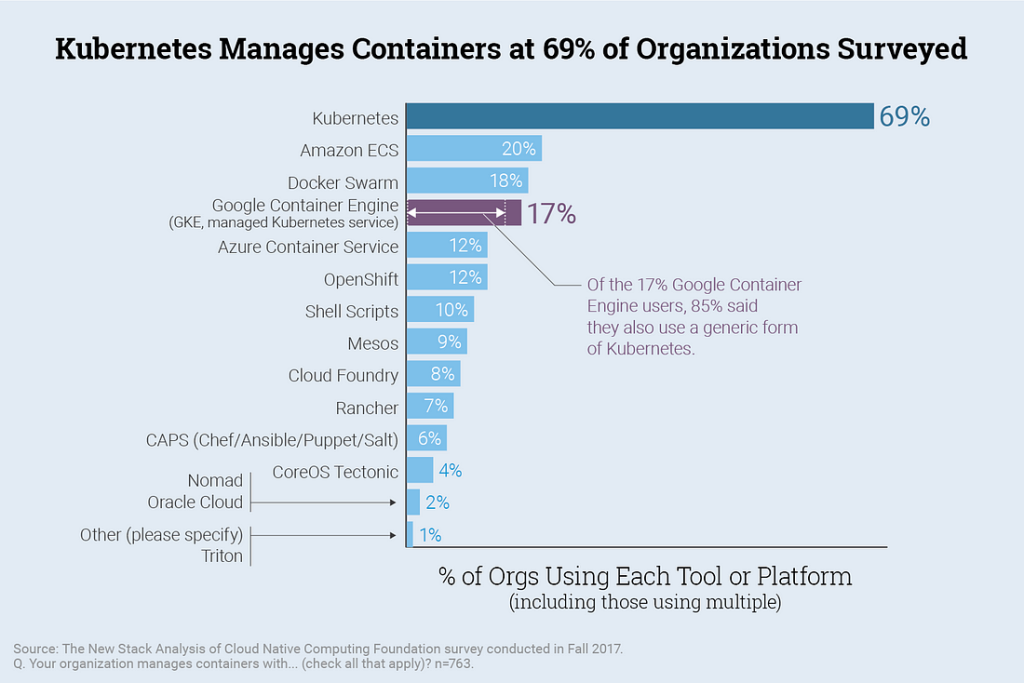

When Kubernetes debuted as an open-source container orchestration tool in 2014, its rise was meteoric. Containerization had just become popular for application deployment, but there were concerns about high availability and disaster recovery. Kubernetes, also called K8s, not only solved this problem but went a step further.

This was a tool that took care of the entire production lifecycle. It featured on-the-fly application deployment, Load-balancing, Auto-scaling and health checks with High Availability. It’s not a surprise that it became the market leader in no time.

All major cloud providers today offer a managed Kubernetes cluster as a service. They have made it far easier to create the infrastructure with just a few clicks and deploy the application.

EKS, the hero of our story:

Amazon Elastic Kubernetes Service (EKS) is the managed Kubernetes offering from AWS. The most striking feature of EKS is how easy it makes creating the Kubernetes cluster and provisioning the required resources. All we needed was a cogent strategy and the power of the AWS Cloud.

The picture below is a perfect representation of exactly how simple it is to work with EKS.

The following is one such implementation: deploying our client’s application on Amazon EKS. The application was a Learning Management System (LMS), and our client was based in California, USA. It was a PHP application, and the client wanted a deployment that did not involve downtime during updates. They had an existing RDS database around which we were to build the entire architecture. This is how we went about it.

1. Overall design diagram

2. Creating EKS cluster

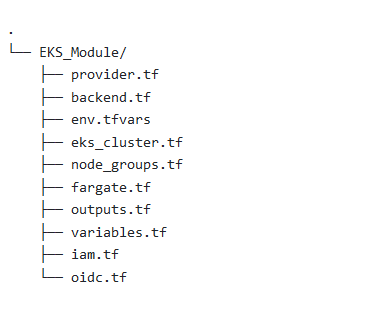

Our preferred tool for managing the complete lifecycle of the infrastructure as code was Terraform.

An open-source, cloud-agnostic tool from HashiCorp, Terraform is growing by leaps and bounds in terms of user base. It allows us to provision cloud resources with code and makes it easier to orchestrate the infrastructure. Being platform-agnostic, it supports all major cloud providers. This is essentially what sets it apart from provider-specific competitors such as AWS CloudFormation, Azure Resource Manager and Google Cloud Deployment Manager.

Install Terraform with Chocolatey on a Windows machine:

choco install terraform

Once installed, we have to initialize Terraform:

terraform init
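`terraform init` downloads the providers that the configuration refers to. The module in this post uses the `aws` provider and, for the OIDC thumbprint, the `tls` provider, so declaring them explicitly keeps runs reproducible. A minimal sketch follows; the version constraints are assumptions, not the versions used in the original project.

```hcl
terraform {
  # Assumed minimum CLI version; adjust to whatever the team actually runs.
  required_version = ">= 1.0"

  required_providers {
    # Used by every aws_* resource in the module.
    aws = {
      source  = "hashicorp/aws"
      version = ">= 4.0" # assumption
    }

    # Used by the tls_certificate data source that feeds the OIDC provider.
    tls = {
      source  = "hashicorp/tls"
      version = ">= 3.0" # assumption
    }
  }
}
```

These settings can live in the same `terraform` block as the backend configuration.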

The following is the Terraform module we created to provision the EKS cluster.

provider "aws" {

region = var.region

#profile = "default"#

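# Placeholder values only. Avoid committing real keys; a named profile or environment variables are safer.

access_key = "my-access-key"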

secret_key = "my-secret-key"

}

terraform {

backend "s3" {

bucket = "s3test.bucket25" #Bucket name here##

key = "test/terraform.tfstate"

region = "us-east-1"

}

}

region = "us-east-1"

#-----------------------------------------------------------

# AWS EKS cluster

#-----------------------------------------------------------

enable = true

eks_cluster_name = "test_cluster"

eks_cluster_enabled_cluster_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

eks_cluster_version = null

#vpc_config

subnets = ["",""]

cluster_security_group_id = null

cluster_endpoint_public_access = true

cluster_endpoint_public_access_cidrs = ["0.0.0.0/0"]

cluster_endpoint_private_access = false

#timeout

cluster_create_timeout = "30m"

cluster_delete_timeout = "15m"

cluster_update_timeout = "60m"

#---------------------------------------------------

# commantags variable

#---------------------------------------------------

name = "test"

enviroment = "test"

application = "test"

support = "Cloudbuilders"

creater = "CloudBuilders"

#---------------------------------------------------

# AWS EKS node group

#---------------------------------------------------

enable_eks_node_group = true

eks_node_group_node_group_name = "test_nodegroup"

eks_node_group_subnet_ids = ["",""]

#Scaling Config

max_size = "3"

desired_size = "2"

min_size = "2"

eks_node_group_ami_type = "AL2_x86_64"

eks_node_group_disk_size = "20"

eks_node_group_force_update_version = null

eks_node_group_instance_types = ["t3.medium"]

eks_node_group_release_version = null

eks_node_group_version = null

#---------------------------------------------------

# IAM

#---------------------------------------------------

permissions_boundary = null

create_eks_service_role = true

#---------------------------------------------------

# OIDC

#---------------------------------------------------

client_id = "sts.amazonaws.com"

#--------------------------------------------------------

#

#------------------------------------------------------------

fargate_subnet = ["",""]

resource "aws_eks_cluster" "eks_cluster" {

# count = var.enable ? 1 : 0 #

name = var.eks_cluster_name != "" ? lower(var.eks_cluster_name) : "${lower(var.name)}-eks-${lower(var.enviroment)}"

role_arn = element(concat(aws_iam_role.cluster.*.arn, [""]), 0)

enabled_cluster_log_types = var.eks_cluster_enabled_cluster_log_types

version = var.eks_cluster_version

tags = module.tags.commantags

vpc_config {

security_group_ids = var.cluster_security_group_id

subnet_ids = var.subnets

endpoint_private_access = var.cluster_endpoint_private_access

endpoint_public_access = var.cluster_endpoint_public_access

public_access_cidrs = var.cluster_endpoint_public_access_cidrs

}

timeouts {

create = var.cluster_create_timeout

delete = var.cluster_delete_timeout

update = var.cluster_update_timeout

}

lifecycle {

create_before_destroy = true

ignore_changes = []

}

depends_on = []

}

#comman tags

module "tags" {

source = "../comman_tags"

name = var.name

enviroment = var.enviroment

application = var.application

support = var.support

creater =var.creater

}

resource "aws_eks_node_group" "eks_node_group" {

count = var.enable_eks_node_group ? 1 : 0

cluster_name = var.eks_cluster_name != "" ? lower(var.eks_cluster_name) : "${lower(var.name)}-eks-${lower(var.enviroment)}"

node_group_name = var.eks_node_group_node_group_name != "" ? lower(var.eks_node_group_node_group_name) : "${lower(var.name)}-node-group-${lower(var.enviroment)}"

node_role_arn = element(concat(aws_iam_role.node.*.arn, [""]), 0)

subnet_ids = var.eks_node_group_subnet_ids

tags = module.tags.commantags

scaling_config {

desired_size = var.desired_size

max_size = var.max_size

min_size = var.min_size

}

ami_type = var.eks_node_group_ami_type

// capacity_type = var.eks_node_group_capacity_type

disk_size = var.eks_node_group_disk_size

force_update_version = var.eks_node_group_force_update_version

instance_types = var.eks_node_group_instance_types

release_version = var.eks_node_group_release_version

version = var.eks_node_group_version

lifecycle {

create_before_destroy = true

ignore_changes = []

}

depends_on = [

aws_eks_cluster.eks_cluster

]

}

resource "aws_eks_fargate_profile" "Fargate_test_cluster" {

cluster_name = var.eks_cluster_name

fargate_profile_name = "fargate_profile"

pod_execution_role_arn = "pod_execution_role"

# Subnet IDs come from the fargate_subnet variable declared for this purpose.

subnet_ids = var.fargate_subnet

selector {

namespace = "test-cluster-pod-namespace1"

}

}

# IAM

# CLUSTER

resource "aws_iam_role" "cluster" {

count = var.create_eks_service_role ? 1 : 0

name = "${var.eks_cluster_name}-eks-cluster-role"

tags = module.tags.commantags

permissions_boundary = var.permissions_boundary

assume_role_policy = <<POLICY

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "eks.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

POLICY

}

resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSClusterPolicy" {

count = var.create_eks_service_role ? 1 : 0

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"

role = element(concat(aws_iam_role.cluster.*.name, [""]), 0)

}

resource "aws_iam_role_policy_attachment" "cluster-AmazonEKSServicePolicy" {

count = var.create_eks_service_role ? 1 : 0

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSServicePolicy"

role = element(concat(aws_iam_role.cluster.*.name, [""]), 0)

}

# NODES

resource "aws_iam_role" "node" {

count = var.create_eks_service_role ? 1 : 0

name = "${var.eks_cluster_name}-eks-node-role"

assume_role_policy = <<POLICY

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "ec2.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

POLICY

}

resource "aws_iam_role_policy_attachment" "node-AmazonEKSWorkerNodePolicy" {

count = var.create_eks_service_role ? 1 : 0

policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"

role = element(concat(aws_iam_role.node.*.name, [""]), 0)

}

resource "aws_iam_role_policy_attachment" "node-AmazonEKS_CNI_Policy" {

count = var.create_eks_service_role ? 1 : 0

policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"

role = element(concat(aws_iam_role.node.*.name, [""]), 0)

}

resource "aws_iam_role_policy_attachment" "node-AmazonEC2ContainerRegistryReadOnly" {

count = var.create_eks_service_role ? 1 : 0

policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"

role = element(concat(aws_iam_role.node.*.name, [""]), 0)

}

/* Commented until access provided to perform "iam:AddRoleToInstanceProfile" */

resource "aws_iam_instance_profile" "node" {

name = "${var.eks_cluster_name}-eks-node-instance-profile"

role = element(concat(aws_iam_role.node.*.name, [""]), 0)

}

data "tls_certificate" "eks_cluster" {

url = aws_eks_cluster.eks_cluster.identity[0].oidc[0].issuer

}

resource "aws_iam_openid_connect_provider" "oidc" {

url = aws_eks_cluster.eks_cluster.identity[0].oidc[0].issuer

client_id_list = [var.client_id]

thumbprint_list = [data.tls_certificate.eks_cluster.certificates[0].sha1_fingerprint]

depends_on = [aws_eks_cluster.eks_cluster]

}

#---------------------------------------------------

# AWS EKS cluster

#---------------------------------------------------

output "eks_cluster_id" {

description = "The name of the cluster."

value = element(concat(aws_eks_cluster.eks_cluster.*.id, [""], ), 0)

}

output "eks_cluster_arn" {

description = "The Amazon Resource Name (ARN) of the cluster."

value = element(concat(aws_eks_cluster.eks_cluster.*.arn, [""], ), 0)

}

output "eks_cluster_endpoint" {

description = "The endpoint for your Kubernetes API server."

value = concat(aws_eks_cluster.eks_cluster.*.endpoint, [""], )

}

output "eks_cluster_identity" {

description = "Nested attribute containing identity provider information for your cluster. Only available on Kubernetes version 1.13 and 1.14 clusters created or upgraded on or after September 3, 2019."

value = concat(aws_eks_cluster.eks_cluster.*.identity, [""], )

}

output "eks_cluster_platform_version" {

description = "The platform version for the cluster."

value = element(concat(aws_eks_cluster.eks_cluster.*.platform_version, [""], ), 0)

}

output "eks_cluster_status" {

description = "The status of the EKS cluster. One of CREATING, ACTIVE, DELETING, FAILED."

value = element(concat(aws_eks_cluster.eks_cluster.*.status, [""], ), 0)

}

output "eks_cluster_version" {

description = "The Kubernetes server version for the cluster."

value = element(concat(aws_eks_cluster.eks_cluster.*.version, [""], ), 0)

}

output "eks_cluster_certificate_authority" {

description = "Nested attribute containing certificate-authority-data for your cluster."

value = concat(aws_eks_cluster.eks_cluster.*.certificate_authority, [""], )

}

output "eks_cluster_vpc_config" {

description = "Additional nested attributes"

value = concat(aws_eks_cluster.eks_cluster.*.vpc_config, [""], )

}

#---------------------------------------------------

# AWS EKS node group

#---------------------------------------------------

output "eks_node_group_arn" {

description = "Amazon Resource Name (ARN) of the EKS Node Group."

value = element(concat(aws_eks_node_group.eks_node_group.*.arn, [""], ), 0)

}

output "eks_node_group_id" {

description = "EKS Cluster name and EKS Node Group name separated by a colon (:)."

value = element(concat(aws_eks_node_group.eks_node_group.*.id, [""], ), 0)

}

output "eks_node_group_status" {

description = "Status of the EKS Node Group."

value = element(concat(aws_eks_node_group.eks_node_group.*.status, [""], ), 0)

}

output "eks_node_group_resources" {

description = "List of objects containing information about underlying resources."

value = concat(aws_eks_node_group.eks_node_group.*.resources, [""], )

}

#---------------------------------------------------

# AWS OIDC

#---------------------------------------------------

output "cluster_identity_oidc_issuer_arn" {

description = "ARN of oidc issuer"

value = element(concat(aws_iam_openid_connect_provider.oidc.*.arn, [""], ), 0)

}

output "cluster_oidc_issuer_url" {

description = "The URL on the EKS cluster OIDC Issuer"

value = flatten(concat(aws_eks_cluster.eks_cluster[*].identity[*].oidc[0].issuer, [""]))[0]

}

#-----------------------------------------------------------

# Global or/and default variables

#-----------------------------------------------------------

variable "region" {

description = "The region where to deploy this code (e.g. us-east-1)."

default = "us-east-1"

}

#-----------------------------------------------------------

# AWS EKS cluster

#-----------------------------------------------------------

variable "enable" {

description = "Enable creating AWS EKS cluster"

default = true

}

variable "k8s-version" {

default = "1.20"

type = string

description = "Required K8s version"

}

variable "eks_cluster_name" {

description = "Custom name of the cluster."

default = ""

}

variable "eks_cluster_enabled_cluster_log_types" {

description = "(Optional) A list of the desired control plane logging to enable. For more information, see Amazon EKS Control Plane Logging"

default = [ ]

type = list(string)

}

variable "eks_cluster_version" {

description = "(Optional) Desired Kubernetes master version. If you do not specify a value, the latest available version at resource creation is used and no upgrades will occur except those automatically triggered by EKS. The value must be configured and increased to upgrade the version when desired. Downgrades are not supported by EKS."

default = "1.20"

}

#vpc Config

variable "cluster_security_group_id" {

description = "If provided, the EKS cluster will be attached to this security group. If not given, a security group will be created with necessary ingress/egress to work with the workers"

type = list(string)

default = []

}

variable "subnets" {

description = "A list of subnets to place the EKS cluster and workers within."

type = list(string)

default = ["",""]

}

variable "cluster_endpoint_public_access_cidrs" {

description = "List of CIDR blocks which can access the Amazon EKS public API server endpoint."

type = list(string)

default = ["0.0.0.0/16", "0.0.0.0/24"]

}

variable "cluster_endpoint_public_access" {

description = "Indicates whether or not the Amazon EKS public API server endpoint is enabled. When it's set to `false` ensure to have a proper private access with `cluster_endpoint_private_access = true`."

type = bool

default = true

}

variable "cluster_endpoint_private_access" {

description = "Indicates whether or not the Amazon EKS private API server endpoint is enabled."

type = bool

default = false

}

#timeout

variable "cluster_create_timeout" {

description = "Timeout value when creating the EKS cluster."

type = string

default = " "

}

variable "cluster_delete_timeout" {

description = "Timeout value when deleting the EKS cluster."

type = string

default = " "

}

variable "cluster_update_timeout" {

description = "Timeout value when updating the EKS cluster."

type = string

default = " "

}

/*

variable "create_before_destroy" {

description = "lifecycle create_before_destroy"

type = bool

default = true

}*/

#---------------------------------------------------

# AWS EKS node group

#---------------------------------------------------

variable "enable_eks_node_group" {

description = "Enable EKS node group usage"

default = true

}

variable "eks_node_group_node_group_name" {

description = "Name of the EKS Node Group."

default = ""

}

variable "eks_node_group_subnet_ids" {

description = "(Required) Identifiers of EC2 Subnets to associate with the EKS Node Group. These subnets must have the following resource tag: kubernetes.io/cluster/CLUSTER_NAME (where CLUSTER_NAME is replaced with the name of the EKS Cluster)."

default = ["",""]

}

#Scaling_config

variable "desired_size" {

default = " "

type = string

description = "Autoscaling Desired node capacity"

}

variable "max_size" {

default = " "

type = string

description = "Autoscaling maximum node capacity"

}

variable "min_size" {

default = " "

type = string

description = "Autoscaling Minimum node capacity"

}

variable "eks_node_group_ami_type" {

description = "(Optional) Type of Amazon Machine Image (AMI) associated with the EKS Node Group. Defaults to AL2_x86_64. Valid values: AL2_x86_64, AL2_x86_64_GPU. Terraform will only perform drift detection if a configuration value is provided."

default = " "

}

variable "eks_node_group_disk_size" {

description = "(Optional) Disk size in GiB for worker nodes. Defaults to 20. Terraform will only perform drift detection if a configuration value is provided."

default = " "

}

variable "eks_node_group_force_update_version" {

description = "(Optional) Force version update if existing pods are unable to be drained due to a pod disruption budget issue."

default = null

}

variable "eks_node_group_instance_types" {

description = "(Optional) Set of instance types associated with the EKS Node Group. Defaults to ['t3.medium']. Terraform will only perform drift detection if a configuration value is provided. Currently, the EKS API only accepts a single value in the set."

default = []

}

variable "eks_node_group_release_version" {

description = "(Optional) AMI version of the EKS Node Group. Defaults to latest version for Kubernetes version."

default = null

}

variable "eks_node_group_version" {

description = "(Optional) Kubernetes version. Defaults to EKS Cluster Kubernetes version. Terraform will only perform drift detection if a configuration value is provided."

default = null

}

#---------------------------------------------------

# commantags variable

#---------------------------------------------------

variable "application" {

type = string

}

variable "name" {

type = string

}

variable "enviroment" {

type = string

}

variable "support" {

type = string

}

variable "creater" {

type = string

}

#---------------------------------------------------

# Iam roles variable

#---------------------------------------------------

variable "permissions_boundary" {

description = "If provided, all IAM roles will be created with this permissions boundary attached."

type = string

default = null

}

variable "create_eks_service_role" {

description = "It will define if the iam role will create or not"

type = bool

default = true

}

#---------------------------------------------------

# Fargate variable

#---------------------------------------------------

variable "fargate_subnet" {

description = "subnets of fargate"

}

#---------------------------------------------------

# Oidc variable

#---------------------------------------------------

variable "client_id" {

description = "(Required) Client ID for the OpenID Connect identity provider."

default = " "

type = string

}

variable "identity_provider_config_name" {

description = "(Required) The name of the identity provider config."

default = " "

type = string

}

variable "bucket_name" {

description = "S3 bucket name for backend"

default = " "

type = string

}

With the code ready, we can define the desired state of our infrastructure and let Terraform do the rest.

terraform plan

This prints the execution plan: the list of all resources that will be provisioned.

terraform apply

This creates the resources according to the values of the defined variables.
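Because every setting is a variable, a second environment only needs a different set of values. As a sketch, a production variable file could override the node sizing with the production figures given in this post (t2.2xlarge, minimum 2, maximum 5). The file name and the cluster and node group names are hypothetical.

```hcl
# production.tfvars (hypothetical file name)

# Hypothetical names for the production cluster and node group.
eks_cluster_name               = "prod_cluster"
eks_node_group_node_group_name = "prod_nodegroup"

# Production sizing as described in this post.
eks_node_group_instance_types = ["t2.2xlarge"]
min_size                      = "2"
desired_size                  = "2"
max_size                      = "5"
```

Such a file would be passed with `terraform apply -var-file="production.tfvars"`; variables it does not mention keep the values supplied elsewhere.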

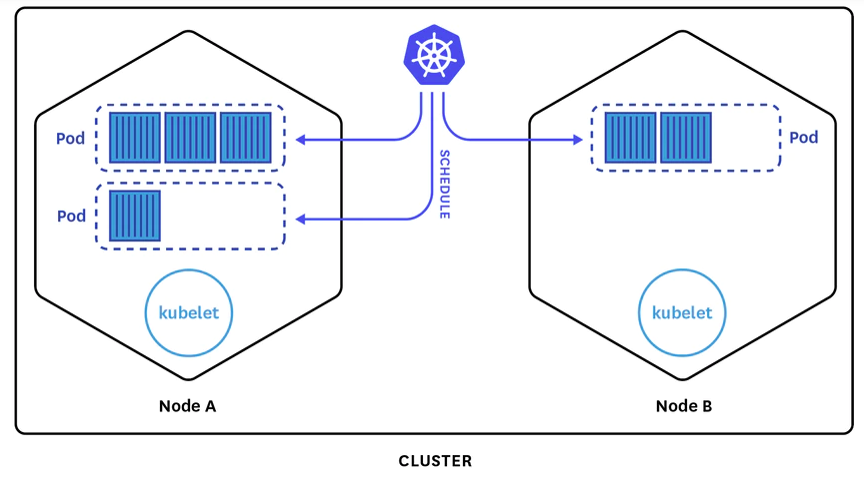

- Two auto-scaling nodes were created in the EKS cluster, using t3.medium EC2 instances for the dev environment and t2.2xlarge for production, with a minimum capacity of 2 and a maximum capacity of 5.

- Namespaces: ‘Development’ and ‘Production’ were created for the respective environments.

Note: Kubernetes allows the same cluster to be used for different environments such as staging, dev and prod. However, a separate namespace has to be created for each environment.

- Kubernetes Services ensure load balancing (by provisioning an ALB) and networking of all objects, and route web requests accordingly.

- An EFS volume was allocated as a persistent volume for the cluster. Pods in the cluster can use it for their storage needs; in the current set-up it hosts the logs.
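The namespaces and the EFS volume listed above could also be expressed in Terraform. The sketch below is an illustration under assumptions, not the original project's code: it uses the `hashicorp/kubernetes` provider, lowercase namespace names (Kubernetes requires lowercase DNS labels), and a hypothetical `lms-logs` creation token. It reuses `aws_eks_cluster.eks_cluster`, `module.tags` and `var.eks_node_group_subnet_ids` from the module.

```hcl
# Short-lived token for talking to the cluster API.
data "aws_eks_cluster_auth" "this" {
  name = aws_eks_cluster.eks_cluster.name
}

provider "kubernetes" {
  host                   = aws_eks_cluster.eks_cluster.endpoint
  cluster_ca_certificate = base64decode(aws_eks_cluster.eks_cluster.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.this.token
}

# One namespace per environment sharing the cluster.
resource "kubernetes_namespace" "environment" {
  for_each = toset(["development", "production"])

  metadata {
    name = each.key
  }
}

# Encrypted EFS file system intended for application logs.
resource "aws_efs_file_system" "logs" {
  creation_token = "lms-logs" # hypothetical
  encrypted      = true
  tags           = module.tags.commantags
}

# One mount target per node subnet (assumes each subnet is in a different AZ).
resource "aws_efs_mount_target" "logs" {
  count = length(var.eks_node_group_subnet_ids)

  file_system_id = aws_efs_file_system.logs.id
  subnet_id      = var.eks_node_group_subnet_ids[count.index]
}
```

Pods would still need the EFS CSI driver and a PersistentVolume definition to mount the file system; those are outside this sketch.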

3. Deployment of PHP app

a. Dockerfile:

The following Dockerfile was written to build a container image of the client’s application.

<paste from dockerfileKubernetes.txt/>

b. Creating image and uploading to ECR:

Once the container image was built, it was uploaded to AWS ECR from where it would be pulled by the Kubernetes cluster to use for deployment.
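The ECR repository that receives the image can be provisioned alongside the cluster. A minimal sketch, assuming a hypothetical repository name `lms-app`; tag immutability and scan-on-push are optional hardening choices, not settings confirmed by the original project.

```hcl
resource "aws_ecr_repository" "app" {
  name                 = "lms-app" # hypothetical
  image_tag_mutability = "IMMUTABLE"

  # Scan each pushed image for known vulnerabilities.
  image_scanning_configuration {
    scan_on_push = true
  }
}

# URL to use when tagging and pushing the image.
output "ecr_repository_url" {
  description = "Registry URL of the application image repository."
  value       = aws_ecr_repository.app.repository_url
}
```

The worker nodes can pull from this repository because the node role already carries the `AmazonEC2ContainerRegistryReadOnly` policy.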

Rolling updates were configured so that there would be no downtime during updates to the application.
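The project configured rolling updates in YAML manifests. To stay in the language used throughout this post, the same idea is sketched here with Terraform's `hashicorp/kubernetes` provider, which is a swapped-in notation rather than the authors' method. It assumes the provider is already configured for the cluster; the names, namespace, port and replica count are hypothetical.

```hcl
variable "app_image" {
  description = "Full image reference, including tag (hypothetical variable)."
  type        = string
}

resource "kubernetes_deployment" "app" {
  metadata {
    name      = "lms-app"
    namespace = "production"
    labels    = { app = "lms-app" }
  }

  spec {
    replicas = 2

    selector {
      match_labels = { app = "lms-app" }
    }

    # Replace pods one at a time and never drop below the desired count,
    # so the old version keeps serving until the new pod is ready.
    strategy {
      type = "RollingUpdate"

      rolling_update {
        max_surge       = "1"
        max_unavailable = "0"
      }
    }

    template {
      metadata {
        labels = { app = "lms-app" }
      }

      spec {
        container {
          name  = "lms-app"
          image = var.app_image

          port {
            container_port = 80
          }
        }
      }
    }
  }
}
```

With `max_unavailable` at zero, an update briefly runs one extra pod instead of taking an existing one out of service.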

c. Manifest files for deploying to EKS:

Once the infrastructure was created and the container images were ready to use, we started working on the manifest files for the Kubernetes cluster.

The following are the files we used to deploy the application; they can be cloned from our repository on Bitbucket.

git clone https://siddiquimohammed@bitbucket.org/mycloudbuilders/kubernetesmanifests.git

<configMap.yaml/>

<service-acc.yaml/>

<secrets-provider.yaml/>

<secrets.yaml/>

<deployment.yaml/>

<horizontalPodAutoscaling.yaml/>

The application was deployed on Auto-scaling Pods in respective environments of development and production.
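Pod auto-scaling of this kind is normally described by a HorizontalPodAutoscaler. The sketch below shows one through Terraform's `hashicorp/kubernetes` provider, assumed to be configured already. The replica bounds, CPU target and the Deployment name `lms-app` are illustrative assumptions, not the project's real values.

```hcl
resource "kubernetes_horizontal_pod_autoscaler" "app" {
  metadata {
    name      = "lms-app"
    namespace = "production"
  }

  spec {
    # Illustrative bounds; tune to the observed load.
    min_replicas = 2
    max_replicas = 6

    # Add pods when average CPU use across pods exceeds 70% of the request.
    target_cpu_utilization_percentage = 70

    scale_target_ref {
      api_version = "apps/v1"
      kind        = "Deployment"
      name        = "lms-app" # hypothetical Deployment name
    }
  }
}
```

CPU-based scaling depends on a metrics source such as metrics-server running in the cluster, and on the containers declaring CPU requests.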

- Networking within the cluster is handled by Kubernetes Services, which route web traffic into the cluster and manage load balancing within it.

- Kubernetes Services allocate virtual IPs to the objects inside the cluster and handle networking between them.
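As an illustration of the Service role described above, the following sketch exposes the application pods behind a stable virtual IP and an AWS load balancer. It uses Terraform's `hashicorp/kubernetes` provider, assumed to be configured already; the names, label and ports are hypothetical.

```hcl
resource "kubernetes_service" "app" {
  metadata {
    name      = "lms-app"
    namespace = "production"
  }

  spec {
    # Traffic goes to every pod carrying this label.
    selector = { app = "lms-app" }

    # LoadBalancer asks the cloud provider for an external load balancer;
    # the Service also receives a cluster-internal virtual IP.
    type = "LoadBalancer"

    port {
      port        = 80
      target_port = 80
    }
  }
}
```

Which kind of AWS load balancer this produces depends on the controller and annotations in use. The ALB mentioned in this post is usually provisioned through an Ingress handled by the AWS Load Balancer Controller, which this sketch does not cover.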

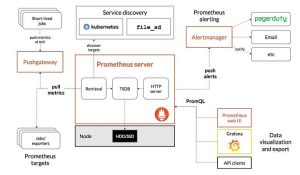

- The Prometheus application is deployed into the cluster to monitor performance, which is visualized on the Grafana service.

- The following figure shows the routing of web traffic to auto-scaling pods on the configured Worker Nodes.

Secrets and Properties | Secure Storage and Access:

Secrets:

The Secrets Store CSI driver and its AWS provider have to be installed on the cluster to enable the use of AWS Secrets Manager. An IAM role has to be created and attached to the worker nodes so that they can fetch values from AWS Secrets Manager. The secrets can be added as key-value pairs in AWS Secrets Manager, and the application needs a function or method that fetches the values from AWS Secrets Manager whenever they are required.
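The IAM permission for the worker nodes can be granted with a small policy attached to the node role from the module. This is a sketch under assumptions: the account ID is the AWS documentation placeholder, and the `lms/` secret prefix and policy name are hypothetical.

```hcl
# Read-only access to the application's secrets.
data "aws_iam_policy_document" "read_app_secrets" {
  statement {
    effect = "Allow"

    actions = [
      "secretsmanager:GetSecretValue",
      "secretsmanager:DescribeSecret",
    ]

    # Placeholder account ID and hypothetical secret prefix.
    resources = ["arn:aws:secretsmanager:us-east-1:111122223333:secret:lms/*"]
  }
}

resource "aws_iam_policy" "read_app_secrets" {
  name   = "lms-read-app-secrets" # hypothetical
  policy = data.aws_iam_policy_document.read_app_secrets.json
}

# Attach the policy to the node role created by the module.
resource "aws_iam_role_policy_attachment" "node_read_app_secrets" {
  count = var.create_eks_service_role ? 1 : 0

  policy_arn = aws_iam_policy.read_app_secrets.arn
  role       = element(concat(aws_iam_role.node.*.name, [""]), 0)
}
```

Scoping the resource ARN to one prefix keeps the nodes from reading unrelated secrets in the account.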

AWS Secrets Manager can rotate keys automatically on a defined schedule. The application then receives the current value whenever it makes a call to fetch it.
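Scheduled rotation can be declared in Terraform as well. The sketch below assumes a rotation Lambda function already exists and is passed in through a hypothetical variable; the secret name and the 30-day interval are illustrative only.

```hcl
variable "rotation_lambda_arn" {
  description = "ARN of an existing Lambda function that performs the rotation (hypothetical)."
  type        = string
}

resource "aws_secretsmanager_secret" "db" {
  name = "lms/db-credentials" # hypothetical
}

# Ask Secrets Manager to invoke the Lambda on a fixed schedule.
resource "aws_secretsmanager_secret_rotation" "db" {
  secret_id           = aws_secretsmanager_secret.db.id
  rotation_lambda_arn = var.rotation_lambda_arn

  rotation_rules {
    automatically_after_days = 30
  }
}
```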

Caching can be enabled within the application so that the code works efficiently; this also limits the number of API calls made to fetch values from AWS Secrets Manager.

Properties (configMap)

We wanted to store the properties in an S3 bucket, where they are protected by 256-bit encryption by default. A change of properties in the ConfigMap file would require the application to be re-deployed.
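A bucket for the properties can state its encryption explicitly rather than relying on the default. A minimal sketch, assuming a hypothetical bucket name and AWS provider version 4 or later (where encryption is configured through a separate resource):

```hcl
resource "aws_s3_bucket" "app_properties" {
  bucket = "example-lms-app-properties" # hypothetical; bucket names are globally unique
}

# Server-side encryption with S3-managed 256-bit AES keys.
resource "aws_s3_bucket_server_side_encryption_configuration" "app_properties" {
  bucket = aws_s3_bucket.app_properties.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}

# Keep the properties private.
resource "aws_s3_bucket_public_access_block" "app_properties" {
  bucket = aws_s3_bucket.app_properties.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```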

- Monitoring: Application Logs, Metrics collection and Visualization

We used Prometheus for monitoring and Grafana to create a fantastic visualization of the cluster performance. Alerts were set up to monitor traffic surges and scaling.

We used Grafana SaaS for this particular project and that made life easier for us and the client’s performance analysts. The Grafana dashboard with minimal monitoring looked something like this.

We have detailed the Monitoring and Visualization aspect in the next part of our series on Application deployment on Kubernetes. Please refer to the link below.

<https:url/for/logging/and/monitoring/>